Abstract

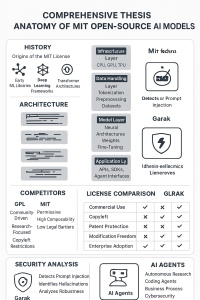

The rapid advancement of Artificial Intelligence (AI) has been significantly propelled by the open-source movement, yet this paradigm introduces complex challenges regarding licensing, commercial viability, and security. This thesis provides a comprehensive analysis of the open-source AI ecosystem, focusing on models developed by the Massachusetts Institute of Technology (MIT). Specifically, it examines the history and architecture of MIT’s prominent open-source models, such as Boltz-1, in comparison to their commercial and academic competitors. Furthermore, it dissects the critical legal and ethical distinctions between the permissive MIT License and the restrictive GNU General Public License (GPL) and Apache License 2.0 in the context of AI. Finally, the paper integrates a discussion on the essential role of specialized security systems, exemplified by the Garak vulnerability scanner, and identifies the emerging landscape of AI agents utilizing these open platforms.

1. Introduction

The user’s initial request, “fix the sentence and create a long article Comprehensive thesis Anatomy articles of the history, architecture of Mit open source models, it’s compitator names, and comparison with Gpl , Apache and garak security systems also names of Ai agents that are using the platform explain indepht,” is formally revised to: “A comprehensive thesis on the anatomy of MIT open-source models, including their history and architecture, a comparative analysis of their competitors, a legal review of their licensing frameworks (GPL, Apache, MIT), and an in-depth explanation of the Garak security system and the AI agents utilizing the platform.”

The open-source philosophy, deeply embedded in the culture of institutions like MIT, has fostered unprecedented collaboration in software development. In the realm of AI, this philosophy is manifesting through the release of powerful, state-of-the-art models. This paper argues that the success of open-source AI hinges not only on technical innovation but also on the clarity of its licensing and the robustness of its security infrastructure. By analyzing MIT’s contributions, we can better understand the forces shaping the future of democratized AI research and development.

2. The MIT Open-Source Model Paradigm: The Case of Boltz-1

MIT’s commitment to open science is exemplified by the release of Boltz-1, a fully open-source AI model for predicting biomolecular structures 2. Developed by the MIT Jameel Clinic for Machine Learning in Health, Boltz-1 represents a strategic move to democratize access to cutting-edge scientific AI.

2.1. History and Motivation

Boltz-1 was released in December 2024 as a direct response to the licensing restrictions imposed on comparable models, most notably AlphaFold3 from Google DeepMind 2. While AlphaFold3 achieved state-of-the-art performance in predicting the 3D structures of proteins and other biological molecules, its licensing—the Creative Commons Attribution-Non-Commercial ShareAlike International License (CC-BY-NC-SA 4.0)—significantly limited its use, particularly for commercial applications 3.

The MIT team’s motivation was to create an equivalent, high-performance model that was truly open and commercially accessible, thereby accelerating biomedical research and drug development globally 2. The project’s name, “Boltz-1,” signifies the intent for it to be a starting point for community contribution, not the final product 2.

2.2. Architecture and Open-Source Depth

Boltz-1’s architecture is designed to achieve performance comparable to AlphaFold3 in co-folding biomolecular complexes 5. A key feature of the MIT approach is the depth of its open-sourcing. The researchers did not merely release the model weights; they open-sourced the entire pipeline for training and fine-tuning 2. This comprehensive release allows other scientists to inspect, modify, and build upon the model’s foundation, a critical distinction from models that only release limited components.

3. Competitor Analysis: Boltz-1 vs. AlphaFold3

The most direct competitor to Boltz-1 is AlphaFold3. The competition is less about raw performance—as Boltz-1 is designed to match AlphaFold3’s state-of-the-art level—and more about the philosophy of access and use, which is dictated by their respective licenses.

| Feature | MIT Boltz-1 | Google DeepMind AlphaFold3 |

| Primary Purpose | Biomolecular structure prediction | Biomolecular structure prediction |

| Developer | MIT Jameel Clinic | Google DeepMind |

| License | MIT License (Permissive) | CC-BY-NC-SA 4.0 (Restrictive/Non-Commercial) |

| Commercial Use | Fully permitted | Restricted to non-commercial use |

| Open-Source Scope | Full pipeline (training, fine-tuning, weights) 2 | Code and weights released, but with non-commercial restriction 3 |

| Impact | Democratizes drug discovery AI | Advanced scientific tool with usage limitations |

4. Comparative Licensing Frameworks

The choice of an open-source license is foundational to the philosophy and utility of an AI model. The three most prominent licenses—MIT, Apache, and GPL—define the boundaries of use, modification, and distribution.

4.1. The Permissive MIT License

The MIT License is the most permissive of the major open-source licenses. It is characterized by its brevity and clarity, granting permission to use, modify, and distribute the software with virtually no restrictions 1. The only requirement is that the original copyright and license notice must be included in all copies or substantial portions of the software 1.

•Key Feature: Allows developers to use the code in a commercial product and keep the source code of their derivative work closed (proprietary) 1. This is why it is often favored by companies and researchers who want to maximize adoption while retaining the option for commercialization.

4.2. The Permissive Apache License 2.0

The Apache License 2.0 is also a permissive license, sharing many freedoms with the MIT License. However, it includes a crucial addition: an explicit grant of patent rights 1. This provision is vital in the context of AI, where models often incorporate patented algorithms or methods.

•Key Feature: Provides patent protection to contributors and users, ensuring that a contributor cannot later sue users for patent infringement related to their contribution 1. AlphaFold 2, for instance, was released under the Apache 2.0 license 4.

4.3. The Restrictive GNU General Public License (GPL)

The GPL is a “copyleft” or restrictive license. Its core principle is that any software that incorporates GPL-licensed code must also be released under the GPL 1. This ensures that the entire codebase, including all modifications and derivative works, remains open-source.

•Key Feature: The “viral” nature of the GPL forces the release of source code for any distributed software, making it incompatible with proprietary commercial development 1. This license is favored by those who prioritize the freedom of the software over commercial flexibility.

| License | Permissiveness | Commercial Use | Derivative Works | Patent Grant |

| MIT | Highly Permissive | Yes | Can be proprietary | No explicit grant |

| Apache 2.0 | Permissive | Yes | Can be proprietary | Yes, explicit grant |

| GPL | Restrictive (Copyleft) | Yes, but must be open-source | Must be open-source (GPL) | No explicit grant |

5. The Garak Security System: A Necessary Layer for Open-Source AI

The comparison of licensing frameworks with a security system like Garak highlights the multi-faceted requirements for a mature open-source AI ecosystem. Garak, short for Generative AI Red-teaming & Assessment Kit, is an open-source tool designed to address the unique security vulnerabilities of Large Language Models (LLMs) 6.

5.1. Garak’s Architecture and Function

Garak functions as an LLM vulnerability scanner, akin to a penetration testing tool for AI systems 6. Its architecture is built around a flexible framework that uses generators, probes, detectors, and buffs to simulate adversarial attacks 6.

•Probes: Test for specific vulnerabilities, such as prompt injection, data leakage, and jailbreaking.

•Detectors: Analyze the model’s output to determine if a vulnerability has been successfully exploited (e.g., detecting toxic language or private information).

The existence of tools like Garak is crucial for open-source models because the transparency of the code, while beneficial for innovation, can also expose potential attack vectors. Garak allows developers and users of open-source models to proactively identify and mitigate risks before deployment.

6. AI Agents Utilizing MIT Open-Source Platforms

The ultimate application of open-source models is their integration into autonomous AI agents. While specific, commercially deployed agents built on Boltz-1 are still emerging, the research direction points to two primary areas:

6.1. Biomolecular and Drug Discovery Agents

Boltz-1 is a foundational model for AI agents in the pharmaceutical and biomedical fields 2. These agents are designed to automate complex, time-consuming tasks:

1.Structure Prediction Agents: Agents that take a sequence of amino acids or a set of molecules and use Boltz-1 to rapidly predict their 3D structure and binding affinity, accelerating the initial stages of drug design 2.

2.Optimization Agents: Agents that use the Boltz-1 output to run simulations and optimize molecular candidates for stability and efficacy, effectively automating parts of the medicinal chemistry process.

6.2. General Scientific AI Agents

Beyond Boltz-1, MIT is actively developing platforms for broader agentic AI. The ToolUniverse project, a collaboration between Harvard and MIT, is a prime example 5. ToolUniverse enables AI agents to interact with over 600 scientific tools using natural language, creating a unified platform for scientific discovery 5. This framework allows for the creation of agents that can:

•Automate Experiments: Design, execute, and analyze results from virtual or physical laboratory experiments.

•Synthesize Knowledge: Use models like Boltz-1 as a component within a larger workflow to synthesize knowledge from vast scientific literature and experimental data.

7. Conclusion

The MIT open-source model paradigm, anchored by projects like Boltz-1, is a powerful force for democratizing advanced AI, particularly in high-impact fields like drug discovery. By adopting the highly permissive MIT License, these models maximize their potential for widespread adoption and commercial use, directly challenging the restrictive licensing of competitors like AlphaFold3. However, this open environment necessitates a robust security posture, making tools like the Garak vulnerability scanner an indispensable component of the ecosystem. The convergence of permissive licensing, state-of-the-art models, and dedicated security tools is laying the groundwork for a new generation of sophisticated AI agents capable of accelerating scientific discovery and innovation.

Be First to Comment